UX Designer Leanne Dobson rounds up the pros and cons of voice technology and what you should consider when designing for voice interaction.

Since 2012, natural language processing has improved and error rates have fallen. Large companies such as Amazon and Google are pushing voice as ‘the next big thing’.

Currently, voice technology is largely employed for personal use in the home, but in future we might see increased use in business and commercial contexts. For example, when trying to set up a conference call, imagine how great it would be to give a few simple spoken instructions instead of faffing around with cables, conference phones and Outlook links.

Defining screen-first and voice-first interactions

Screen-first: mobiles, tablets and televisions enhanced with voice control.

Voice-first: smart speakers such as Echo and Google Home, some of which have now been upgraded with screens, such as the Echo Show.

Top personal uses of voice-first technology

Sometimes the primary use of smart speakers seems to be to trigger BLIND CURSING when they:

- don’t understand what you are trying to say (a frequent occurrence, I find)

- randomly go off for no reason (this happened to me during a Skype interview – very embarrassing!)

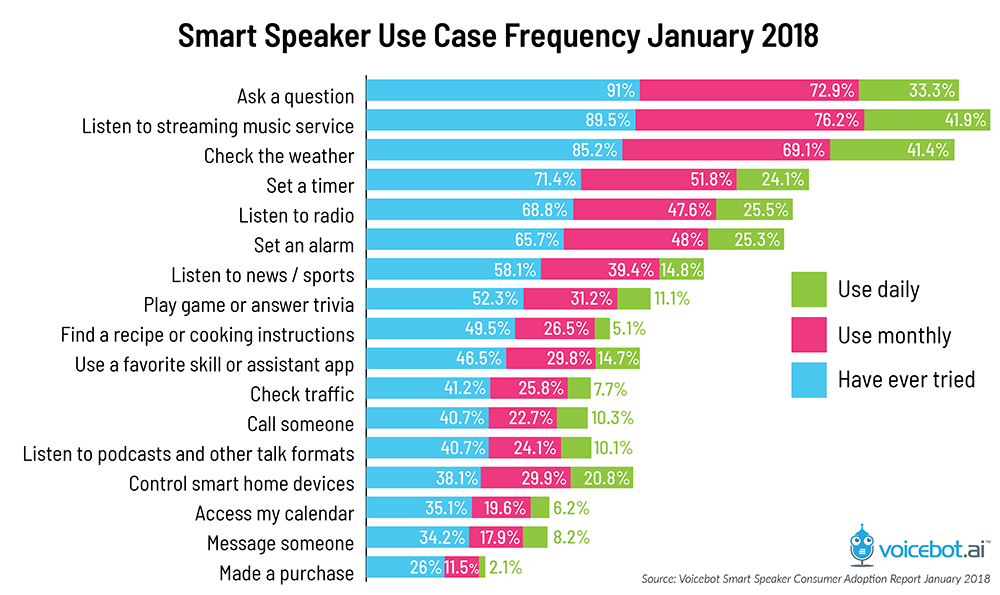

But actually, the top personal uses are:

- asking a question

- listening to music

- checking the weather

- setting alarms and reminders

Source: https://www.voicebot.ai/amazon-echo-alexa-stats/

Pros and cons

Pros

Voice-first is an efficient input modality that allows for multitasking and bypassing complex navigation menus for familiar tasks.

Screen-first is an efficient output modality: large amounts of information can be displayed, which reduces the burden on memory, and the screen can convey statuses and visual signifiers for commands. This is what the Echo Show does, although what it chooses to display can seem rather random.

Cons

Integrating voice and screen into a single system is challenging, and single-use devices tend to have a short lifespan. In many screen-first products, such as Siri and Google Assistant, voice is an afterthought, which creates a fragmented user experience.

With touchscreen devices, there can be missing functionality, where voice initiates a process but subsequent steps require touch interaction. However, this is improving. When I ask Google Assistant to play a song on Google Play, it starts the requested song without further intervention at least 80% of the time.

My personal experiences of using the Echo Show include hearing my three-year-old son slightly mispronounce “Alexa”. This doesn’t trigger the device, so he ends up shouting louder. I’ve also observed my mother-in-law, a non-native English speaker, struggling to pronounce certain words to make herself understood by both Echo and Google Home.

There are also concerns about corporate surveillance and voice search history being hacked.

Designing for voice interaction with accessibility in mind

The shift to voice can deliver huge benefits, from allowing people with dyslexia to interact without written text, to bringing independence for those with motor function impairment. It is important to consider accessibility when designing voice interaction. Here are some suggestions.

Deaf and hard of hearing

- Provide multimodal interfaces that allow volume control.

- Offer alternatives to speech-only interactions.

Cognitive disabilities

- Create a linear architecture so the user doesn’t get lost in the navigation. This is especially important for voice-first.

- Provide context where terms could be confusing. Avoid ambiguous language.

- Use literal language with short and simple words.

- Avoid long menu options. Put the most important and frequently used choices first. (This will benefit all users.)
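As a rough illustration of the last suggestion, here is a minimal Python sketch that builds a short spoken menu with the most frequently used choices first. The function name, the usage counts and the limit of three options are all assumptions made for the example, not part of any particular voice platform.

```python
from typing import Dict


def build_menu_prompt(usage_counts: Dict[str, int], max_options: int = 3) -> str:
    """Return a short spoken menu with the most-used options first.

    `usage_counts` and `max_options` are hypothetical inputs for illustration.
    """
    # Rank by usage, highest first; break ties alphabetically for stable output.
    ranked = sorted(usage_counts, key=lambda name: (-usage_counts[name], name))
    top = ranked[:max_options]

    if not top:
        return "Sorry, there is nothing to choose from right now."

    # Join the options the way a person would say them aloud.
    if len(top) == 1:
        spoken = top[0]
    else:
        spoken = ", ".join(top[:-1]) + " or " + top[-1]

    prompt = f"You can say: {spoken}."
    # Keep the menu short, but tell the user that more choices exist.
    if len(ranked) > max_options:
        prompt += " Say 'more' to hear other options."
    return prompt


print(build_menu_prompt({"weather": 5, "music": 9, "alarm": 2, "news": 1}))
```

Capping the list and offering "more" keeps each prompt short enough to hold in memory, while still leaving the less common options reachable.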

Physical disabilities

- Design the system to understand broken and shaky speech. This can also benefit non-native speakers.

- Offer alternatives to speech-only interaction and design pauses into listening.

Vision impairments

- Limit the number of voice interactions needed and keep them short, allowing for interruptions.

- Offer the ability to control the speech rate.

- Make the voice interface discoverable to people using screen readers.
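One common way to control speech rate is the `prosody` element of SSML (Speech Synthesis Markup Language), which accepts named rates such as `slow` and `fast`. The sketch below wraps a response in that markup. The function name is hypothetical, and whether a given assistant honours the rate is an assumption you would need to check against that platform's documentation.

```python
from xml.sax.saxutils import escape

# Named rate values defined by the SSML prosody element.
ALLOWED_RATES = {"x-slow", "slow", "medium", "fast", "x-fast"}


def to_ssml(text: str, rate: str = "medium") -> str:
    """Wrap plain text in SSML with the user's preferred speech rate."""
    if rate not in ALLOWED_RATES:
        raise ValueError(f"Unsupported speech rate: {rate!r}")
    # Escape &, < and > so the user's text cannot break the markup.
    return f'<speak><prosody rate="{rate}">{escape(text)}</prosody></speak>'


print(to_ssml("Your alarm is set for 7 o'clock.", rate="slow"))
```

Storing the preferred rate as a user setting, rather than asking each time, keeps the number of voice interactions down.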

Voice and screen together

Dedicated single-use devices inevitably get used less as the novelty wears off. Handicapping screen functionality for voice-only interactions limits the device’s usefulness and increases user frustration and cognitive load.

It is impossible to remember all the voice-only commands, although the Echo Show has a screen that prompts you with suggestions. There is a need for a set of standardised command phrases and keywords to allow users to intuitively navigate between different devices and their AI assistants.
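To make the idea of standardised command phrases concrete, here is a minimal sketch that maps several everyday phrasings onto one shared command name. The phrase list and command names are invented for illustration. Real assistants use far more sophisticated language understanding, so treat this as a picture of the concept rather than how any product works.

```python
import re
from typing import Optional

# Hypothetical shared vocabulary: one command name, several accepted phrasings.
CANONICAL_COMMANDS = {
    "play": ["play", "start", "put on"],
    "stop": ["stop", "cancel", "be quiet"],
    "volume_up": ["louder", "turn it up", "volume up"],
}


def match_command(utterance: str) -> Optional[str]:
    """Return the shared command name for an utterance, or None if unknown."""
    # Lower-case, drop punctuation and collapse repeated spaces.
    letters_only = re.sub(r"[^a-z\s]", "", utterance.lower())
    normalised = " ".join(letters_only.split())

    for command, phrases in CANONICAL_COMMANDS.items():
        for phrase in phrases:
            # Match whole words only, so "play" does not match "display".
            if re.search(rf"\b{re.escape(phrase)}\b", normalised):
                return command
    return None


print(match_command("Please turn it up!"))
print(match_command("Put on some jazz"))
```

If every device accepted the same small vocabulary, users could carry what they have learned from one assistant to the next instead of memorising a new set of commands each time.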

Screen-first has the advantage of being able to display large amounts of information on a screen, reducing cognitive load for the user. Voice and screen need to work in unison for a truly effective user experience.


Resources

https://www.nngroup.com/articles/voice-first/

https://uxdesign.cc/tips-for-accessibility-in-conversational-interfaces-8e11c58b31f6

https://uxdesign.cc/voice-user-experience-design-and-prototyping-for-mere-mortals-ef080c843640

https://developers.google.com/actions/design/conversation-repair